Baud rate vs bit rate is one of the most confusing topics out there. Folks use the two terms interchangeably without really knowing which is which. Definitions first.

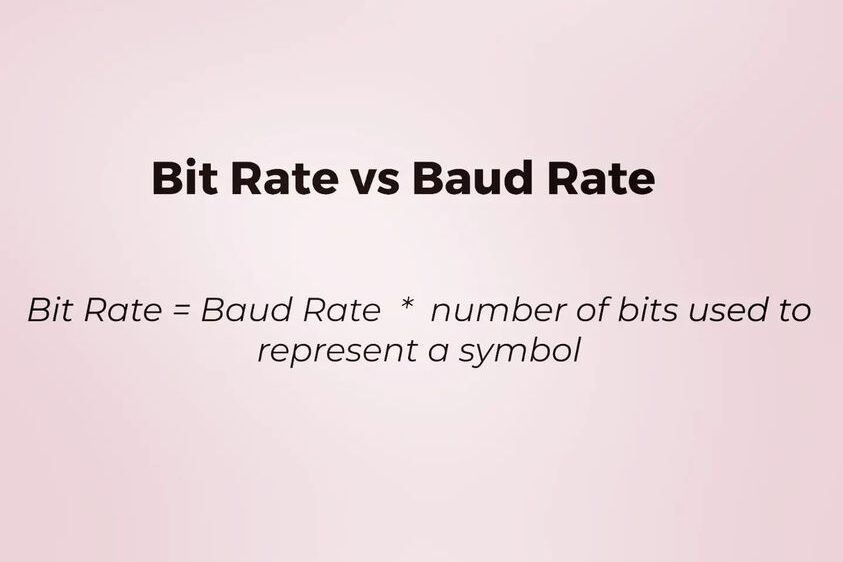

Bit rate is the number of bits transmitted per second.

Baud rate is the number of symbols transmitted per second.

Now, what the heck is a symbol? A symbol is just a way to package information: a distinct signal state (for example, a voltage level) sent during one time slot. Depending on what the transmitter and receiver agree on, one symbol can represent, say, 2, 5, 6 or 32 bits. Think of it like a secret code that you and your friend agree on before you start talking.
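Written as a formula (this is the standard relation, not spelled out above): if each symbol carries $n$ bits, the signal needs $M = 2^n$ distinguishable states, and the two rates are linked by the number of bits per symbol.

$$
M = 2^{n}, \qquad n = \log_2 M, \qquad \text{bit rate} = \text{baud rate} \times \log_2 M
$$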

Electrically, let’s say you want to send 4 voltage levels from 0 to 3 V. I will use 0 V = 00, 1 V = 01, 2 V = 10 and 3 V = 11. Here each symbol encodes 2 bits. So if I transmit 1 symbol per second, my baud rate is 1 but my bit rate is 2 bits per second, because each symbol carries 2 bits.
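Here is a minimal Python sketch of the 4-level example, assuming the number of levels is a power of two. The function names and the `VOLTAGE_FOR_BITS` table are hypothetical, chosen only to turn the bit-pair-to-voltage mapping into code and to compute bit rate as baud rate times bits per symbol.

```python
import math

def bits_per_symbol(levels: int) -> int:
    """Bits carried by one symbol when the signal has `levels` distinct states.

    Assumption: `levels` is a power of two (2, 4, 8, ...), as in the 0-3 V example.
    """
    if levels < 2 or levels & (levels - 1):
        raise ValueError("levels must be a power of two >= 2")
    return int(math.log2(levels))

def bit_rate(baud: float, levels: int) -> float:
    """Bit rate (bits/s) = baud rate (symbols/s) x bits per symbol."""
    return baud * bits_per_symbol(levels)

# Hypothetical table for the example: each 2-bit pair maps to one voltage (in V).
VOLTAGE_FOR_BITS = {"00": 0, "01": 1, "10": 2, "11": 3}

def encode(bits: str) -> list[int]:
    """Split a bit string into 2-bit symbols and map each one to a voltage level."""
    if len(bits) % 2:
        raise ValueError("need an even number of bits")
    return [VOLTAGE_FOR_BITS[bits[i:i + 2]] for i in range(0, len(bits), 2)]

print(bit_rate(1, 4))      # 1 symbol/s with 4 levels -> 2 bits/s
print(encode("00011011"))  # 8 bits become 4 symbols: [0, 1, 2, 3]
```

With two levels (binary signalling), `bit_rate(9600, 2)` returns 9600: each symbol carries exactly one bit, so the two rates coincide.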

So where does all the confusion come from? In serial communication between embedded devices, for example, you typically use binary signalling, meaning 1 symbol = 1 bit (either 0 or 1). In this case the baud rate always equals the bit rate, which is one reason the two terms get used interchangeably. Take UART: on Windows, the default setting is usually 9600, which here means 9600 bits per second. So 1 bit lasts 1/9600 s ≈ 104 µs.
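The bit-time arithmetic can be sketched in a few lines of Python, assuming binary signalling so that one symbol is one bit. The helper name `bit_period_us` is hypothetical.

```python
def bit_period_us(baud: float) -> float:
    """Duration of one bit in microseconds, assuming 1 bit per symbol (baud == bit rate)."""
    if baud <= 0:
        raise ValueError("baud must be positive")
    return 1_000_000 / baud

print(round(bit_period_us(9600), 2))  # 104.17

# Other common UART settings, for comparison.
for baud in (19200, 115200):
    print(baud, "baud ->", round(bit_period_us(baud), 2), "us per bit")
```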

To confuse things further, this is not the actual data rate, because UART adds a start bit and a stop bit to every 8 bits of data (the common 8N1 format, with no parity bit). So each 8-bit byte takes 10 bit times, 10 × 104 µs ≈ 1.04 ms, to transfer. This is the UART protocol overhead. The actual useful data rate (excluding protocol bits) is therefore 8 bits per 10 bit times, i.e. 9600 × 8/10 = 7680 bits per second.
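A small Python sketch of the overhead calculation follows. It assumes binary signalling (baud equals bit rate) and back-to-back frames with no idle time between them; the function name and its parameters are hypothetical, and the defaults correspond to 8N1.

```python
def uart_payload_rate(baud: float, data_bits: int = 8, parity_bits: int = 0,
                      stop_bits: int = 1, start_bits: int = 1) -> float:
    """Useful data bits per second on an asynchronous UART link.

    Assumes 1 bit per symbol and continuous transmission (no gaps between frames).
    """
    frame_bits = start_bits + data_bits + parity_bits + stop_bits  # 10 for 8N1
    frames_per_second = baud / frame_bits
    return frames_per_second * data_bits

print(uart_payload_rate(9600))      # 7680.0 useful bits per second
print(uart_payload_rate(9600) / 8)  # 960.0 bytes per second
```

Adding a parity bit (`parity_bits=1`) makes each frame 11 bits long, which lowers the useful rate further, to about 6982 bits per second at 9600 baud.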

Hopefully this clears up most of the doubts out there on the topic.