Last week, a friend and I were talking about logistics and machinery tracking for a project, and the technologies that make it possible. One that came up was Bluetooth’s PAwR (Periodic Advertising with Responses), and it’s worth discussing today.

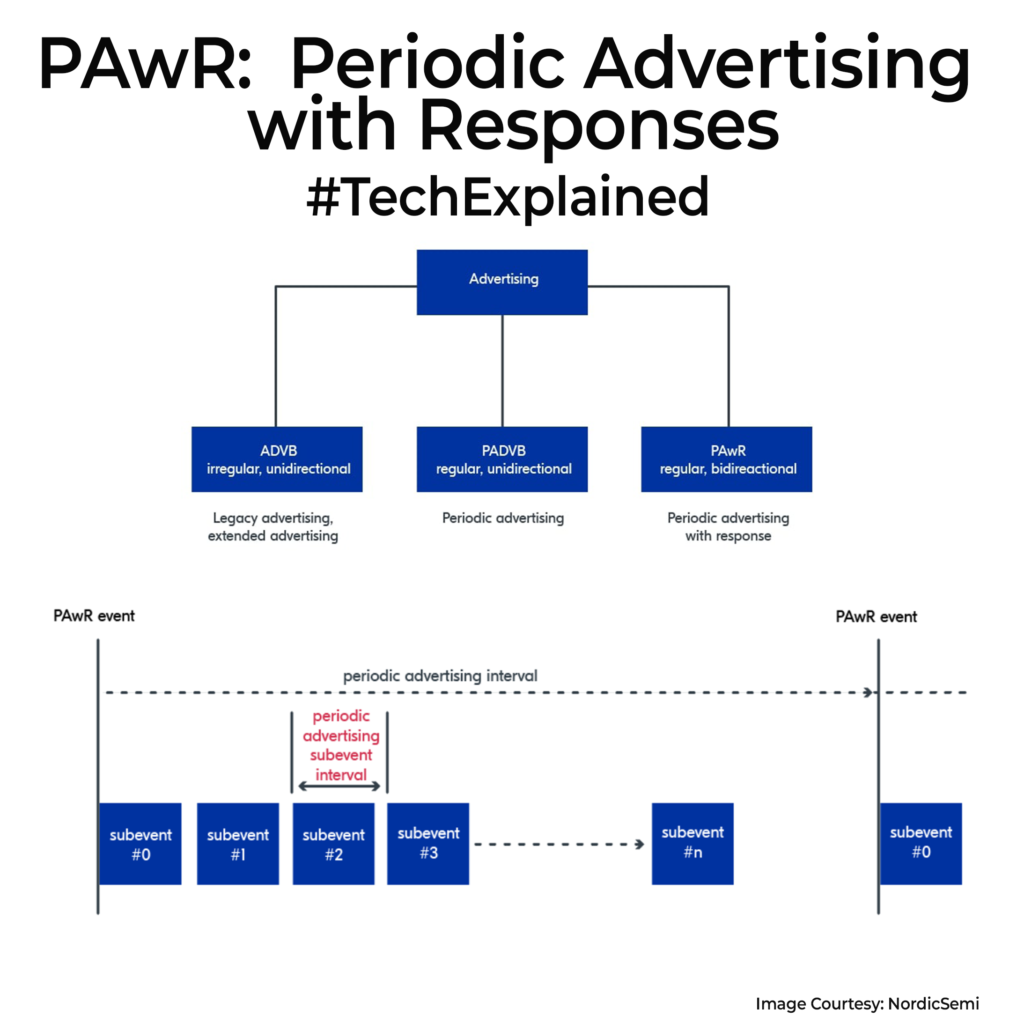

Bluetooth 5.4 introduced PAwR as a new way to enable two-way communication between a single hub and thousands of low-power tags or sensors. These devices can exchange data without establishing individual connections, which saves significant battery power. Earlier BLE versions struggled to combine large device counts with power efficiency: advertising was one-way, and keeping a separate connection open to every device does not scale to thousands. BLE 5.0 improved on this with extended and periodic advertising, but communication was still one-way. PAwR made connectionless bidirectional communication possible and standardized it.

How does it work? The central hub sends periodic advertising events. Each event contains several subevents, and each subevent is followed by specific response slots. The tags (observers) know exactly when to wake up, listen, and respond, so each one can stay asleep except when it is scheduled to listen or reply, which extends battery life dramatically. This structured scheduling is similar to TDMA (Time Division Multiple Access) used in telecom systems, where devices communicate in pre-allocated time slots. The result is a one-to-many network in which thousands of devices interact efficiently without establishing individual connections, ideal for scenarios like electronic shelf labels (ESLs) in retail or industrial sensor networks.
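To make the slot structure concrete, here is a minimal Python sketch of the timing a tag would follow. It assumes a simplified model: subevent k starts k × the subevent interval after the event begins, and response slot j opens after a fixed response slot delay plus j × the slot spacing. The field names are loosely modeled on the Bluetooth 5.4 HCI parameters (Num_Subevents, Subevent_Interval, Response_Slot_Delay, Response_Slot_Spacing, Num_Response_Slots), but the `PawrSchedule` class, its device-to-slot mapping, and the example values are hypothetical and do not represent any real Bluetooth stack API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PawrSchedule:
    """Simplified PAwR timing model; all times are in milliseconds.

    Field names loosely follow the Bluetooth 5.4 HCI parameters, but this class
    and its methods are a hypothetical illustration, not a real stack API.
    """
    periodic_interval_ms: float      # time between periodic advertising events
    num_subevents: int               # subevents per event
    subevent_interval_ms: float      # spacing between consecutive subevent starts
    response_slot_delay_ms: float    # gap from subevent start to the first response slot
    response_slot_spacing_ms: float  # spacing between consecutive response slots
    num_response_slots: int          # response slots per subevent

    def __post_init__(self) -> None:
        # All subevents must fit inside one periodic interval...
        if self.num_subevents * self.subevent_interval_ms > self.periodic_interval_ms:
            raise ValueError("subevents do not fit in the periodic interval")
        # ...and all response slots must fit inside one subevent interval.
        slots_end = (self.response_slot_delay_ms
                     + self.num_response_slots * self.response_slot_spacing_ms)
        if slots_end > self.subevent_interval_ms:
            raise ValueError("response slots overrun the subevent interval")

    def capacity(self) -> int:
        """How many devices can each own a distinct (subevent, slot) pair."""
        return self.num_subevents * self.num_response_slots

    def assign(self, device_index: int) -> tuple[int, int]:
        """Hypothetical mapping: fill each subevent's slots before moving to the next."""
        if not 0 <= device_index < self.capacity():
            raise ValueError("device index exceeds schedule capacity")
        return divmod(device_index, self.num_response_slots)

    def wake_times(self, event: int, subevent: int, slot: int) -> tuple[float, float]:
        """Return (listen_at, respond_at) in ms, measured from the first event's start."""
        event_start = event * self.periodic_interval_ms
        listen_at = event_start + subevent * self.subevent_interval_ms
        respond_at = (listen_at + self.response_slot_delay_ms
                      + slot * self.response_slot_spacing_ms)
        return listen_at, respond_at


# Example values (assumed): 128 subevents x 12.5 ms = one event every 1.6 s.
schedule = PawrSchedule(
    periodic_interval_ms=1600.0,
    num_subevents=128,
    subevent_interval_ms=12.5,
    response_slot_delay_ms=2.5,
    response_slot_spacing_ms=0.25,
    num_response_slots=32,
)

subevent, slot = schedule.assign(1000)
listen_at, respond_at = schedule.wake_times(event=2, subevent=subevent, slot=slot)
print(f"capacity={schedule.capacity()}, device 1000 -> subevent {subevent}, slot {slot}")
print(f"event 2: listen at {listen_at} ms, respond at {respond_at} ms")
```

With these assumed values a single hub offers 4,096 distinct response slots per 1.6 s event, and each tag only needs its radio on around its own subevent and slot; the rest of the time it can sleep.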

PAwR isn’t designed for real-time responses (a device has to wait for its scheduled slot). Instead, it’s designed for predictable battery life and scalable management of thousands of nodes. Messages are short, and larger transfers are handled via temporary connections only when needed.
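The latency trade-off follows directly from the schedule. This sketch, under a simplified model, computes how long a command waits before a tag answers: one that reaches the hub right at the start of the tag's subevent is answered within a few milliseconds, while one that just misses it waits almost a full periodic interval. The function and the timing values are hypothetical, and the model ignores processing time, interference, and retransmissions.

```python
def command_latency_ms(arrival_ms: float, periodic_interval_ms: float,
                       subevent_offset_ms: float, response_offset_ms: float) -> float:
    """Time from a command reaching the hub until the tag answers in its response slot.

    subevent_offset_ms: when the tag's subevent starts within every event (assumed).
    response_offset_ms: delay from that subevent start to the tag's response slot (assumed).
    Ignores processing time, radio interference, and retries.
    """
    # How far past the tag's most recent subevent start the command arrived.
    phase = (arrival_ms - subevent_offset_ms) % periodic_interval_ms
    # A command arriving exactly at a subevent start is assumed to make it in;
    # otherwise it waits for the same subevent in the next event.
    wait_ms = 0.0 if phase == 0 else periodic_interval_ms - phase
    return wait_ms + response_offset_ms


INTERVAL_MS = 1600.0    # assumed periodic advertising interval
SUBEVENT_AT_MS = 387.5  # assumed start of the tag's subevent within each event
RESPONSE_AT_MS = 4.5    # assumed delay to the tag's response slot

best = command_latency_ms(387.5, INTERVAL_MS, SUBEVENT_AT_MS, RESPONSE_AT_MS)
worst = command_latency_ms(388.0, INTERVAL_MS, SUBEVENT_AT_MS, RESPONSE_AT_MS)
print(f"just in time: {best} ms, just missed: {worst} ms")
```

Shortening the periodic interval cuts this worst-case wait but makes every tag wake more often, which is the battery-versus-latency balance PAwR is tuned for.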

Using PAwR, a simple device that wakes briefly every 1.6 seconds can run for five full years on a tiny coin-cell battery, spending a total of just 8 hours in active communication over that entire span. This makes it ideal for large-scale deployments such as electronic shelf labels, price tags, and physical asset tracking.
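A quick back-of-the-envelope check shows how short each wake-up has to be for those figures to hold. Assuming 365-day years and that the 8 active hours are spread evenly across wake-ups, five years at one wake-up every 1.6 seconds is roughly 98.55 million wake-ups, so 8 hours works out to about 0.3 ms of radio activity each, a duty cycle below 0.02%. The function name is hypothetical; it only redoes the arithmetic.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # leap days ignored (assumption)


def activity_budget(wake_period_s: float, lifetime_years: float,
                    active_hours: float) -> dict[str, float]:
    """Spread a total active time evenly over every wake-up in the device's lifetime."""
    lifetime_s = lifetime_years * SECONDS_PER_YEAR
    wakeups = lifetime_s / wake_period_s
    active_s = active_hours * 3600
    return {
        "wakeups": wakeups,
        "active_ms_per_wakeup": active_s / wakeups * 1000,
        "duty_cycle_percent": active_s / lifetime_s * 100,
    }


budget = activity_budget(wake_period_s=1.6, lifetime_years=5, active_hours=8)
print(f"{budget['wakeups']:,.0f} wake-ups")
print(f"{budget['active_ms_per_wakeup']:.2f} ms awake per wake-up")
print(f"{budget['duty_cycle_percent']:.4f} % duty cycle")
```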